Tranquility shouldn't require giving up your private self to a device

(Image credit: Getty Images)

OpenAI’s CEO, Sam Altman, confirmed this week that the company is building a brand‑new AI‑first device. He says it will stand in stark contrast to the clutter and chaos of our phones and apps. Indeed, he compared using it to “sitting in the most beautiful cabin by a lake and in the mountains and sort of just enjoying the peace and calm.” But having a device understand you in context and analyze your habits, moods, and routines feels more intimate than most people get with their loved ones, let alone with a piece of hardware.

His framing obscures a very different reality. A device designed to monitor your life constantly, collecting details about where you are, what you do, how you speak, and more, sounds suffocating. Having an electronic observer absorb every nuance of your behavior and adapt itself to your life might sound okay, until you remember what that data has to pass through to provide the analysis.

Calling a device calming is like closing your eyes and hoping you’re invisible. It’s surveillance: voluntary, but all-encompassing. The promise of serenity feels like a clever cover for surrendering privacy, and worse. Round-the-clock context‑awareness does not equal peace.

AI eyes on you

Solitude and peace rely on a feeling of security. A device that claims to give me calm by dissolving those boundaries only exposes me. Altman’s cabin‑by‑the‑lake analogy is seductive. Who hasn’t daydreamed about escaping the constant ping of notifications, the flashing ads, the algorithmic chaos of modern apps, about walking away from all that and into a peaceful retreat? But serenity built on constant observation is an illusion.

This isn’t just gizmo‑skepticism. There is a deeply rooted paradox here. The more context‑aware and responsive this device becomes, the more it knows about you. The more it knows, the more potential there is for intrusion.

The version of calm that Altman is trying to sell us is dependent on indefinite discretion. We have to trust the right people with all our data and believe that an algorithm, and the company behind it, will always handle our personal information with deference and care. We have to trust that they will never turn the data into leverage, never use it to influence our thoughts, our decisions, our politics, our shopping habits, our relationships.

That is a big ask, even before looking at Altman's history regarding intellectual property rights.

Altman has repeatedly defended the use of copyrighted work for training without permission or compensation to creators. In a 2023 interview, he acknowledged that AI models have “hoovered up work from across the internet,” including copyrighted material without explicit permission, simply absorbing it en masse as training data. He tried to frame that as a problem that could be addressed only “once we figure out some sort of economic model that works for people.” He admitted that many creatives were upset, but he offered only vague promises that someday there might be something better.

He said that giving creators a chance to opt in and earn a share of revenue might be “cool,” if they choose, but declined to guarantee that such a model would ever actually be implemented. If ownership and consent are optional conveniences for creators, why would consumers be treated differently?

Remember that within hours of launch, Sora 2 was flooded with clips using copyrighted characters and well‑known franchises without permission, prompting legal backlash. The company reversed course quickly, announcing it would give rights‑holders “more granular control” and move to an opt‑in model for likeness and characters.

That reversal might look like accountability. But it is also a tacit admission that the original plan was essentially to treat everyone’s creative efforts as free raw material. To treat content as something you mine, not something you respect.

Across both art and personal data, the message from Altman seems to be that access at scale is more important than consent. A device claiming to bring calm by dissolving friction and smoothing out your digital life means a device with oversight of that life. Convenience is not the same as comfort.

I am not arguing here that all AI assistants are evil. But treating AI like a toolbox is not the same as making it a confidante for every element of my life. Some might argue that the risk is acceptable if the device’s design is good and there are real safeguards. But that argument assumes a perfect future, managed by perfect people. History isn’t on our side.

The device Altman and OpenAI plan on selling might be great for all kinds of things and well worth trading privacy for, but they should make that tradeoff clear. That tranquil lake may as well be a camera lens. Just don't pretend the lens isn't there.

Eric Hal Schwartz, Contributor

Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.

You must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

Logout Read more OpenAI’s rumored ‘always on’ AI device sounds terrifying – but Sora 2 shows it doesn’t care about boundaries

OpenAI’s rumored ‘always on’ AI device sounds terrifying – but Sora 2 shows it doesn’t care about boundaries

An AI executive's dire warnings about the future are chilling – but his solution is worse than the problem

An AI executive's dire warnings about the future are chilling – but his solution is worse than the problem

Neo robot sounds like the answer to our home chore prayers...but also a potential privacy nightmare

Neo robot sounds like the answer to our home chore prayers...but also a potential privacy nightmare

I tried Sora 2 – I love it and it’s about to ruin everything

I tried Sora 2 – I love it and it’s about to ruin everything

We're entering a new age of AI moderation, but it may be too late to rein in the chatbot beast

We're entering a new age of AI moderation, but it may be too late to rein in the chatbot beast

People are falling in love with ChatGPT, and that’s a major problem

Latest in OpenAI

People are falling in love with ChatGPT, and that’s a major problem

Latest in OpenAI

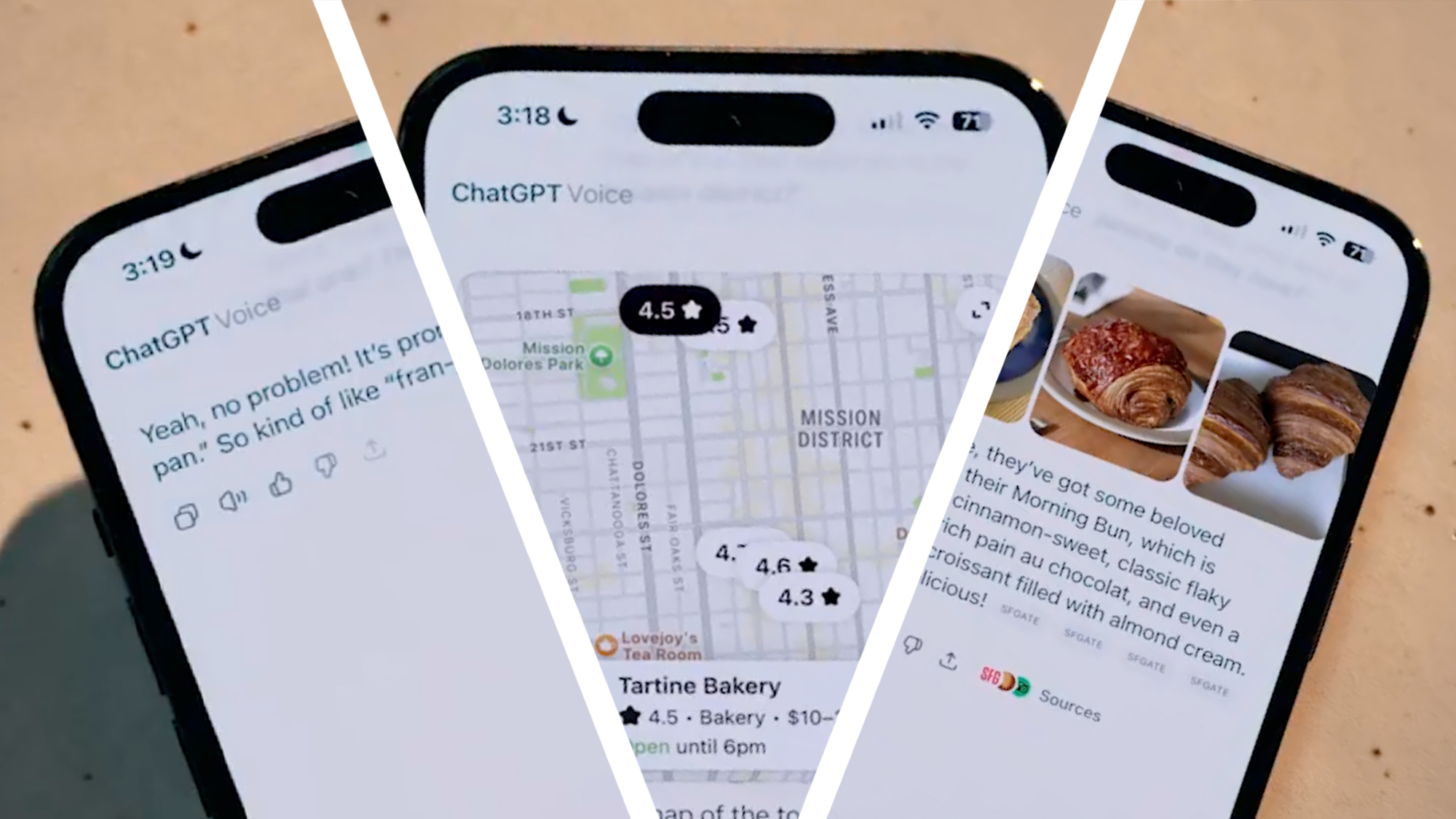

ChatGPT’s new voice integration feels like the missing piece in AI chat

ChatGPT’s new voice integration feels like the missing piece in AI chat

5 things you need to know about ChatGPT's big voice mode update

5 things you need to know about ChatGPT's big voice mode update

ChatGPT’s Agent feature lets you assign tasks and walk away – here’s how

ChatGPT’s Agent feature lets you assign tasks and walk away – here’s how

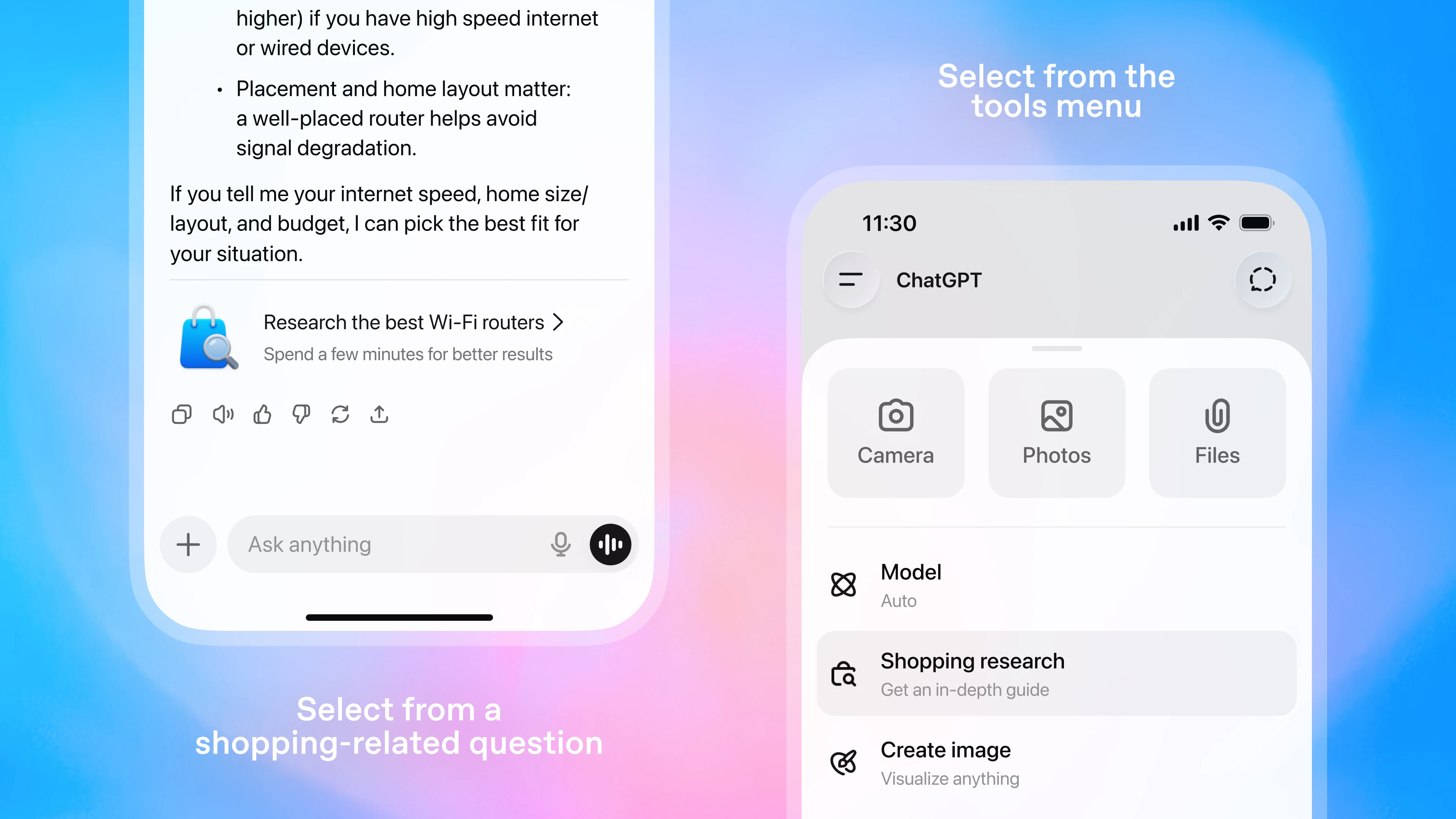

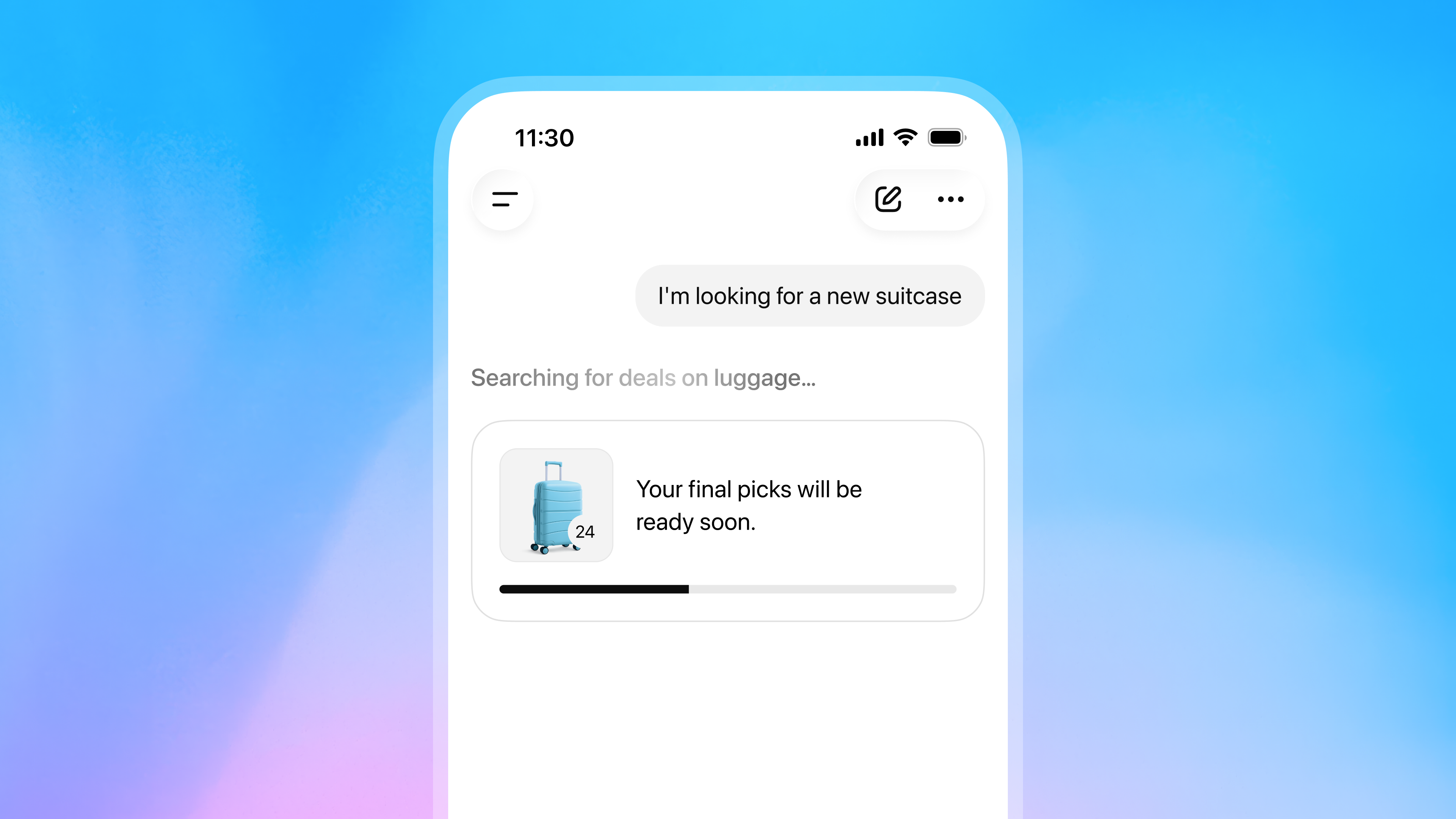

Is ChatGPT's new shopping tool the AI personal shopper I've always wanted?

Is ChatGPT's new shopping tool the AI personal shopper I've always wanted?

Sam Altman says his new AI device should feel like a "cabin by a lake"

Sam Altman says his new AI device should feel like a "cabin by a lake"

ChatGPT’s new Shopping Research tool compares products for you

Latest in Opinion

ChatGPT’s new Shopping Research tool compares products for you

Latest in Opinion

Sam Altman wants his AI device to feel like 'sitting in the most beautiful cabin by a lake,' but it sounds more like endless surveillance

Sam Altman wants his AI device to feel like 'sitting in the most beautiful cabin by a lake,' but it sounds more like endless surveillance

Please don't date your AI because it will never love you or pick up the check

Please don't date your AI because it will never love you or pick up the check

The next frontier for AI-ready connectivity: efficiency in deployment

The next frontier for AI-ready connectivity: efficiency in deployment

The new holiday shopping concierge Is AI: Why conversational search will shape Black Friday & Cyber Monday

The new holiday shopping concierge Is AI: Why conversational search will shape Black Friday & Cyber Monday

The Trump Administration just launched its own plan for global AI dominance and what could go wrong?

The Trump Administration just launched its own plan for global AI dominance and what could go wrong?

The three speeds of zero trust

LATEST ARTICLES

The three speeds of zero trust

LATEST ARTICLES- 1Got an Xbox Series X/S for Christmas? Do these 5 things right now