
Scam apps may access sensitive user data without proper consent


(Image credit: Shutterstock / Ken Stocker)


- Neural Techlabs repeatedly uploads apps mimicking Google Gemini and OpenAI ChatGPT

- Apps use logos, names, and interfaces to confuse unsuspecting users

- Removed apps keep reappearing, suggesting gaps in Apple's review process

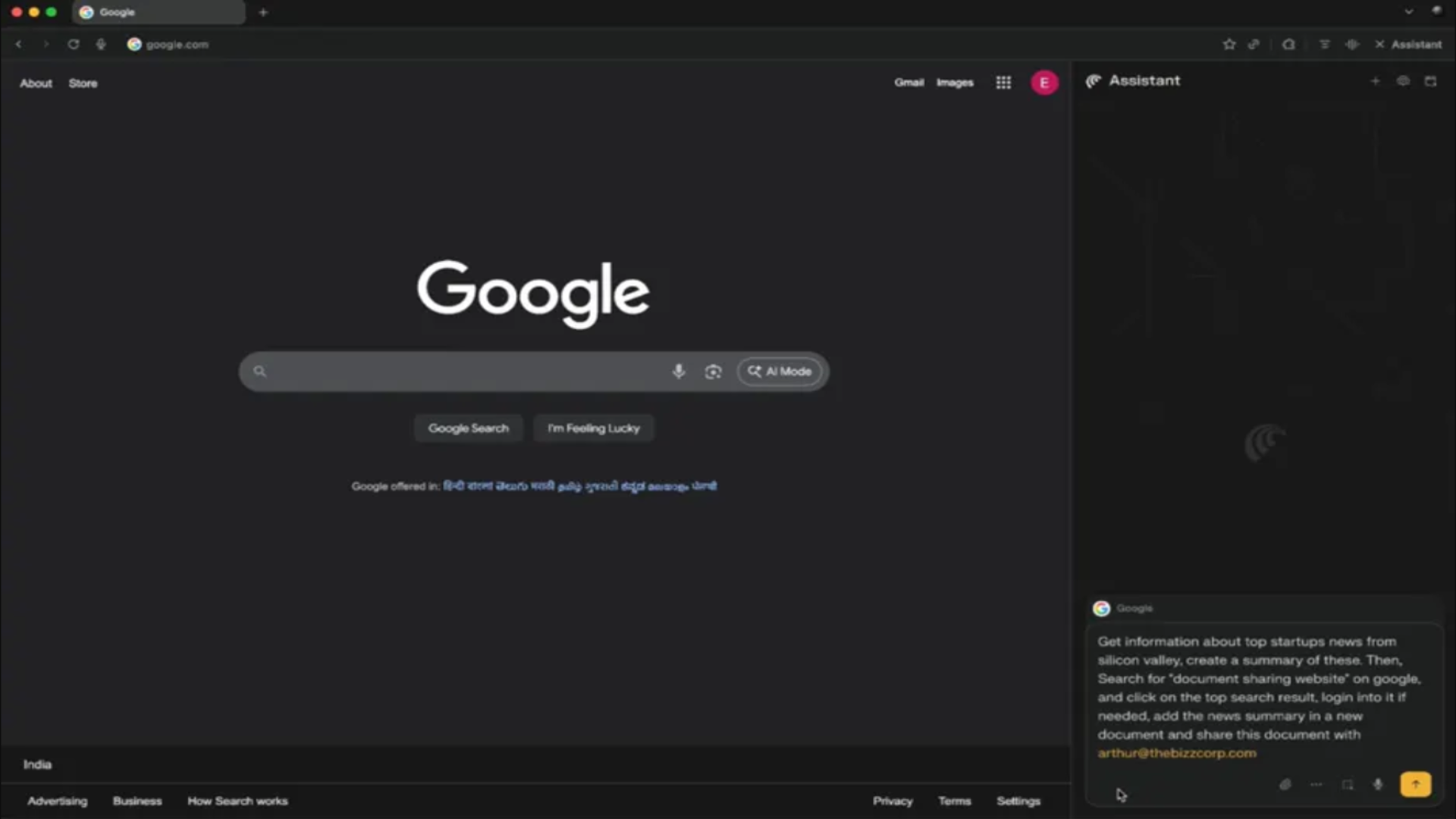

Apple’s Mac App Store is facing renewed scrutiny after several apps were discovered impersonating well-known AI products.

A developer account, Neural Techlabs, has been identified as repeatedly publishing applications that mimic two official products: Google's Gemini and OpenAI's ChatGPT.

These apps use brand logos, naming conventions, and interface elements that closely resemble legitimate software, creating a high risk of user confusion.


Persistent violations despite removal

Investigations suggest that several associated developer accounts may belong to the same group, amplifying concerns about a coordinated attempt at deception.

Although some of these apps have been removed in the past for intellectual property infringements, new iterations continue to appear on the platform.

One current example, titled “AI Chat Bot for Google Gemini”, intentionally mirrors Google’s branding and design language, making it difficult for users to distinguish it from the authentic product.

A previous app from the same developer, “AI Chat Bot Ask Assistant”, was also removed due to repeated violations of Apple’s platform rules.

Despite these removals, Neural Techlabs continues to publish similar apps that explicitly reference OpenAI's ChatGPT.

Making such references in metadata or descriptions is a direct violation of OpenAI’s branding guidelines.

These impersonating apps are not only misleading; they can also expose users to real security risks, since people may unknowingly install software that handles sensitive information or exploits their trust in recognized brands.


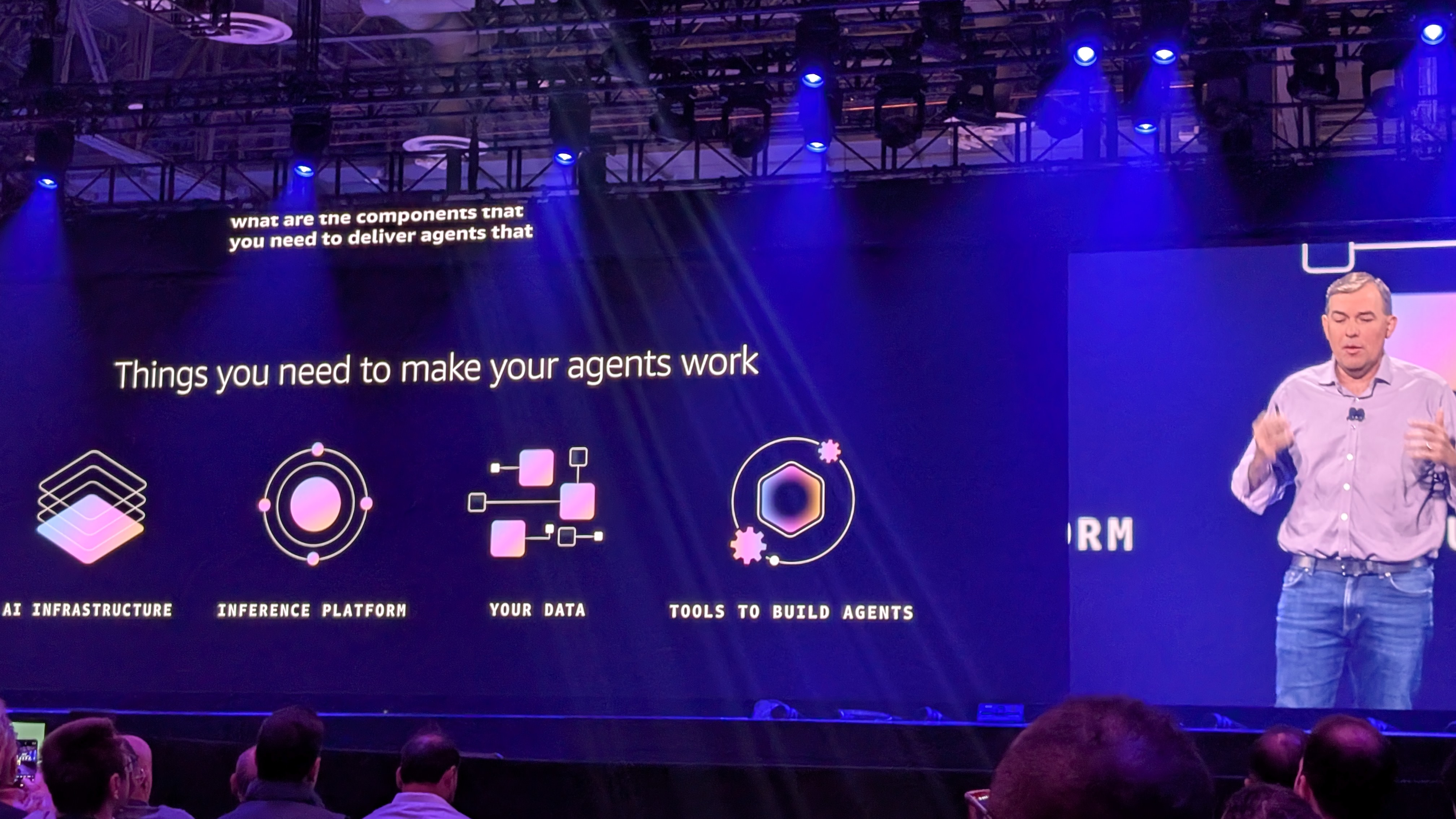

While Apple applies a review process to identify violations, the repeated re-uploading of these applications indicates potential gaps in platform oversight.

In some cases, downloaded apps could compromise devices in ways that traditional antivirus software might not immediately detect.

These risks are compounded by the apps’ ability to access AI tools or external network resources, increasing the potential for malicious behavior.

The continued presence of scam applications undermines confidence in the Mac App Store’s review mechanisms.

It also exposes the limits of current safeguards, including firewall protections against untrusted software.

Users relying on the platform for AI experiences must exercise caution, verify developer credentials, and remain aware that brand impersonation can circumvent basic security measures.


Efosa Udinmwen, Freelance Journalist

Efosa has been writing about technology for over 7 years, initially driven by curiosity but now fueled by a strong passion for the field. He holds both a Master's and a PhD in sciences, which provided him with a solid foundation in analytical thinking.
